Increase Memory & Performance of Sitecore XM Cloud Development Environments

Accessing all available system memory when using Docker


Whenever I’m developing for Sitecore, I want the maximum amount of memory possible, down to the last kilobyte. I want to ensure Sitecore has access to all the resources it could possibly need. But in the world of Docker it’s not quite as straightforward. Our machines have the memory, but Docker controls how much of it each container can access.

Let’s take a look at how to assign more memory to our containers and increase the performance of our environments.

Find the docker-compose.override.yml in your XM Cloud project root folder. Ensure you’re using a V2 Docker Compose file.

Find the rendering container definition (which for me starts at line 14) and add the mem_limit property as follows:

    rendering:
      image: ${REGISTRY}${COMPOSE_PROJECT_NAME}-rendering:${VERSION:-latest}
      mem_limit: 8192m
      build:
        context: ./docker/build/rendering
        target: ${BUILD_CONFIGURATION}

This will provision 8 GB for your local rendering host. That’s more than it should need, but if you’ve got it, flaunt it.

Find the cm container definition (around line 66) and add the mem_limit property again:

    cm:
      image: ${REGISTRY}${COMPOSE_PROJECT_NAME}-xmcloud-cm:${VERSION:-latest}
      mem_limit: 16384m
      build:
        context: ./docker/build/cm

The CM container is running Sitecore, so this needs the most juice. Here I’ve increased the limit to 16 GB.
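If you want to double-check the arithmetic behind those values, here is a minimal Python sketch. It assumes Docker's convention that the k, m and g suffixes are binary multiples (so 8192m is exactly 8 GiB); the `to_bytes` and `to_gib` helpers are hypothetical names of mine, not part of Docker or the starter kit, and they only cover the simple forms used in this post.

```python
# Docker-style memory suffixes are binary multiples: k = 1024, m = 1024**2, g = 1024**3.
UNITS = {"b": 1, "k": 1024, "m": 1024**2, "g": 1024**3}


def to_bytes(limit):
    """Convert a simple memory string such as '8192m' to bytes."""
    text = limit.strip().lower()
    if text[-1] in UNITS:
        return int(text[:-1]) * UNITS[text[-1]]
    return int(text)  # a bare number is treated as bytes


def to_gib(limit):
    """Convert a memory string to gibibytes."""
    return to_bytes(limit) / 1024**3


# The two limits used in this post.
for name, limit in {"rendering": "8192m", "cm": "16384m"}.items():
    print(f"{name}: {limit} = {to_gib(limit):g} GiB")
```

Adding the two limits together (24 GiB here) is a quick way to see whether your machine can actually back what you have promised the containers.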

You might come across an additional mem_reservation option, but Docker on Windows does not support it.

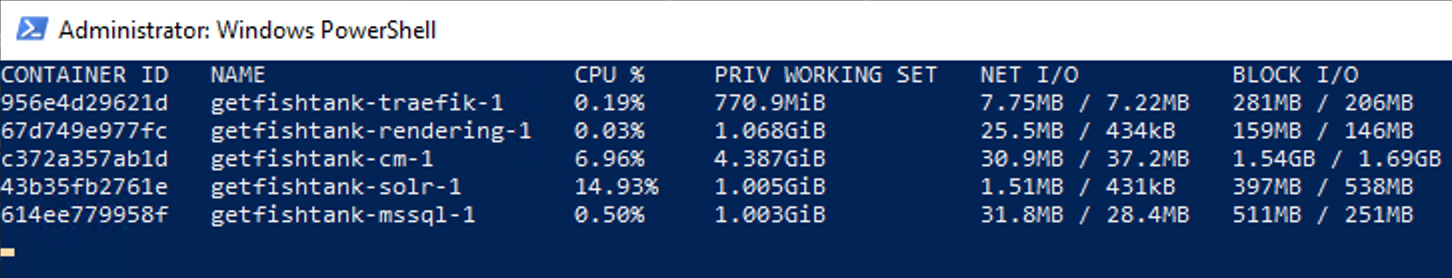

You will have to bring your containers down and back up for the memory changes to take effect. Then, by running docker stats, you can see how much memory is being used.

Remember, we’re defining the maximum memory Docker can allocate to the container, so hopefully actual usage will sit under your max while being able to push higher than before.
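To compare usage against the limit without squinting at the table, something like the following Python sketch could help. It assumes you feed it lines produced by `docker stats --no-stream --format "{{json .}}"`, and that each JSON row carries `Name` and `MemUsage` fields; as far as I know Linux containers report `used / limit` while Windows containers report only the used amount, so the sketch treats the limit as optional. The function names and sample rows are made up for illustration.

```python
import json
import re

# docker stats prints sizes with binary units such as MiB and GiB.
BINARY_UNITS = {"B": 1, "KiB": 1024, "MiB": 1024**2, "GiB": 1024**3, "TiB": 1024**4}


def parse_size(text):
    """Convert a size such as '1.5GiB' to a number of bytes."""
    match = re.fullmatch(r"([\d.]+)\s*([KMGT]iB|B)", text.strip())
    if match is None:
        raise ValueError(f"unrecognised size: {text!r}")
    value, unit = match.groups()
    return float(value) * BINARY_UNITS[unit]


def memory_usage(stats_json_line):
    """Return (name, used_bytes, limit_bytes or None) for one JSON stats row."""
    row = json.loads(stats_json_line)
    parts = [part.strip() for part in row["MemUsage"].split("/")]
    used = parse_size(parts[0])
    limit = parse_size(parts[1]) if len(parts) > 1 else None
    return row["Name"], used, limit


# Hypothetical sample rows: one with a limit, one without.
samples = [
    '{"Name": "cm", "MemUsage": "4GiB / 16GiB"}',
    '{"Name": "rendering", "MemUsage": "1.5GiB"}',
]
for line in samples:
    name, used, limit = memory_usage(line)
    shown_limit = "n/a" if limit is None else f"{limit / 1024**3:g} GiB"
    print(f"{name}: used {used / 1024**3:g} GiB, limit {shown_limit}")
```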

At the time of writing, the XM Cloud starter kit is not using V3, but if you're using Docker Compose V3 the syntax would look something like:

    version: "3.8"
    services:
      cm:
        image: ${REGISTRY}${COMPOSE_PROJECT_NAME}-xmcloud-cm:${VERSION:-latest}
        deploy:
          resources:
            limits:
              memory: 16384M
            reservations:
              memory: 8192M

We increased the memory that can be allocated to the rendering container, but Node.js by default has a memory limit of around 2 GB. So in addition to giving the container more memory, we have to explicitly instruct Node to use it.

From your project root, open /docker/build/rendering/Dockerfile. Then add a new NODE_OPTIONS environment variable.

    ARG PARENT_IMAGE
    FROM ${PARENT_IMAGE} as debug
    ENV NODE_OPTIONS=--max-old-space-size=8192
    WORKDIR /app
    EXPOSE 3000
    #ENTRYPOINT "npm install && npm install next@canary && npm run start:connected"
    ENTRYPOINT "npm install && npm run start:connected"

Make sure the value in the ENV NODE_OPTIONS=--max-old-space-size=8192 line lines up with the mem_limit for your rendering container.

This is also the fix for any FATAL ERROR Heap Out Of Memory errors you find in your rendering host.
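Since the two numbers live in different files, they are easy to let drift apart. Here is a rough Python sketch of a check that reads both and compares them. It is deliberately naive: it scans the compose text line by line rather than using a real YAML parser, only understands limits written with an m suffix, and the `compose_limit_mib` and `node_heap_mib` helpers plus the sample strings are my own illustrations rather than anything shipped with the starter kit.

```python
import re


def compose_limit_mib(compose_text, service):
    """Return the mem_limit (in MiB) for a service, or None if it is not set."""
    lines = compose_text.splitlines()
    for index, line in enumerate(lines):
        if line.strip() != f"{service}:":
            continue
        indent = len(line) - len(line.lstrip())
        for child in lines[index + 1:]:
            if child.strip() and len(child) - len(child.lstrip()) <= indent:
                break  # we have left this service's block
            match = re.match(r"\s*mem_limit:\s*(\d+)m\s*$", child, re.IGNORECASE)
            if match:
                return int(match.group(1))
    return None


def node_heap_mib(dockerfile_text):
    """Return the --max-old-space-size value (in MiB), or None if absent."""
    match = re.search(r"--max-old-space-size=(\d+)", dockerfile_text)
    return int(match.group(1)) if match else None


# Trimmed-down stand-ins for the two files edited in this post.
COMPOSE = """\
services:
  rendering:
    image: example-rendering
    mem_limit: 8192m
  cm:
    image: example-cm
    mem_limit: 16384m
"""
DOCKERFILE = "ENV NODE_OPTIONS=--max-old-space-size=8192\n"

limit = compose_limit_mib(COMPOSE, "rendering")
heap = node_heap_mib(DOCKERFILE)
print(f"container limit: {limit} MiB, Node heap: {heap} MiB, heap fits: {heap <= limit}")
```

In a real project you would read the two files from disk instead of using the inline strings.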

The Apollo mission navigation computer had a total of 78 KB of memory (only 2 KB re-writable!). Sonic The Hedgehog fit into a 512 KB cartridge. And now we need gigabytes of memory to run JavaScript. At least now we know how to access all the memory we’ve paid for in XM Cloud development environments. Thanks for reading.